Apologies for the delay in getting this post out. Having focused in previous posts on primary research, this post will focus more on secondary research. I aim to introduce what systematic reviews are, why do one, how to do one and most importantly how to read one. As always, comments, critique and discussion welcome!

What is a Systematic Review?

A systematic review (SR) is a scientific tool which can be used to summarise, appraise and communicate the results and implications of otherwise unmanageable quantities of research. A systematic review is different from a review in the sense that, a review is often narrative with the aim to synthesise results of some publications on a topic whilst an SR attempts to comprehensively identify all literature on a given topic. Reviews are subject to criticism because of their high risk of bias due to their freedom of selection and thus the validity of the findings is often questioned.

Why conduct a Systematic Review?

A SR allows for a comprehensive analysis of literature on a given topic that takes quality into consideration. In doing so, a SR provides more precise conclusions by synthesising the results of a number of smaller studies; note that SR provide more precision not more power, power relates to primary research. This increased precision in turn allows for enhanced confidence in the effectiveness/reliability/validity of the item under investigation. Notice how I didn’t just say effectiveness? SR were historically only undertaken with regard to the effectiveness of an intervention however it is now recognised that SR can be performed for almost any primary research design such as reliability, validity, adherence, risk factors to name but a few.

Advantages of SR (Greenhalgh 2010)

- Explicit methods used limit bias in study selection.

- Large amount of information identified and synthesised.

- Therefore, conclusions are more reliable & accurate.

- Different studies formally compared to establish generalisability and consistency

OR

- Reasons for inconsistency identified and new hypothesis formulated.

- With meta-analysis, precision of the overall result is increased.

It is clear that there are very good reasons as to why SR should be conducted and there are some strong advantages. As clinicians they should be our first port of call and the reason why should be quite obvious – they’ve done all the hard work for you! The literature has been searched, the literature has been appraised, the literature has been summarised and the findings have been synthesised so you don’t have to!

Not only do SR answer questions, they also highlight gaps in current knowledge and formulate new hypotheses. When proposing a new trial a SR is initially conducted to demonstrate the current limitations of practice or understanding and in turn justify the need for the trial. They are therefore useful not only for clinical purposes but serve a research role also.

SR sit quite smugly at the top of all hierarchies of evidence and when done well, quite rightly so. I’ll come on to how to determine whether or not a SR is done well in a short while but I’ll first highlight a few limitations.

Limitations of SR

- Publication Bias (Easterbrook et al. 1991)

- Poor quality of primary studies limit the review (Liberati 2001).

- Heterogeneity of Primary Studies

- Combining statistics is complex in the absence of homogeneity.

Publication Bias is a bias with regard to what is likely to be published, among what is available to be published. Studies that demonstrated statistically significant results were more than twice as likely to be published that those finding no difference between the study groups; furthermore those with statistically significant results were more likely to be published in journals with a high citation impact factor. Impact factor relates to the average number of citations to recent articles published in that journal, the higher the impact factor the more important that journal is regarded.

Physiotherapy Journal 1.49, BMJ 17.21 – I’ll leave you to make your own conclusions from that…

It has been shown the trials of higher quality are less likely to demonstrate positive outcomes whilst trials of poorer quality are more likely to demonstrate positive outcomes; therefore if the quality of the primary studies included in the review are poor, then this reduces confidence in the results, and produces weak conclusions even when the results are consistent.

Now, the authors of the SR should do this for you and demonstrate their appraisal of the quality of the included trials and what impact this has on the strength of the evidence. You may have seen in the past things like, conflicting, limited, moderate evidence etc. More recent systematic reviews are using the GRADE whereby the authors are trying to define the risk of bias and in turn take this is into consideration when summarising the findings of the review.

Methodology

There are 10 stages to conducting a good systematic review:

- Starting out

- The protocol

- Source identification

- Search strategy

- Screening the literature

- Data extraction

- Quality assessment

- Synthesis

- Results

- Summary

1 – Starting Out

As with any research, a SR starts with an idea. A survey of the literature is then required to check that the idea is both necessary and feasible – checking whether it has been done before and are there enough studies that can be included in the review.

From this a well-defined research question needs to be created using a PICO/PIOS framework.

– Population or Problem

– Intervention/Comparator

– Outcomes

– Study Design

2- The Protocol

As with any research, the method of data collection needs to be determined prior to commencing the research for both ethical and methodological reasons. If the review protocol isn’t established apriori and in turn followed, bias can be introduced into the review limiting its conclusions. The protocol should describe the following:

- Sources of Literature

- Search Strategy

- Inclusion/Exclusion Criteria (PIOS)

- Screening Strategy

- Quality Assessment Tool (Predetermined criteria to be used which should then be operationalised)

- Data extraction method

- Data synthesis method (Qualitative or Quantitative Synthesis? i.e. Narrative or Meta-Analysis)

With regard to operationalising the method, this should be conducted for the quality assessment of the included primary studies, the data extraction method as well as the outcomes to allow for comparison. Operationalise refers to defining a variable in a way that it is measurable. For example if looking at function in LBP, primary studies may have used the Oswestry Disability Index (scored out of 100) or the Roland-Morris Disability Questionnaire (scored out of 24). A predetermined way to operationalise the outcomes could be to multiply all RMDQ scores by 3.6 to allow comparison between studies for example.

3- Source Identification

Pragmatically not all electronic databases need to be searched, only those most pivotal to the review topic. Medline produces the most hits when searching and I therefore recommend the inclusion of Medline in most search strategies. Physiotherapy Evidence Database (PEDro) offers the advantage of the study already being rated for you however, only includes intervention studies on its database; if you are undertaking a SR on the reliability of the a classification system, clearly PEDro doesn’t need to be searched.

You may read search strategies that have limited the timespan of the review, this shouldn’t really occur unless the intervention was only introduced in a certain year e.g. if you were reviewing cognitive functional therapy you wouldn’t receive any hits of papers from the 1990s as it is a relatively contemporary management approach. It isn’t good practice to limit the data being included in the review unless there is a technical reason, otherwise it is purely arbitrary, a limit of the search should however be given e.g. papers until November 2013.

There is some debate with regard to SR as to whether you can limit the timespan of a review to the year when the last SR was undertaken. A SR looking at the effectiveness of PRP for long bone healing was conducted in 2012, to update this review in 2014 the authors could limit their search to papers published after their original SR. I would argue that they should run the full search again and include all available literature in keeping with the definition and purpose of the review.

Snowballing, the process whereby the reference lists of included papers are searched should be undertaking, especially of the more recent literature or other similar reviews or hand searching of specialist journals creates a comprehensive search strategy. Should grey literature be sought? The inclusion of grey literature creates a complete data set however does introduce some limitations to the review methodology in the sense that it isn’t reproducible and thus introduces bias as well it not being peer reviewed and in turn potentially being poor quality.

Trials that have been registered could also be searched by using sources such as Current Controlled Trials, this data may not have been published however there will usually be a lead author identified who can be contact and may provide the data. If they don’t release this information to you, this can still be mentioned in the write up.

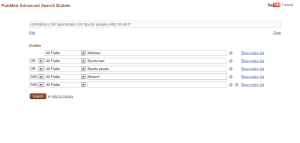

Search Strategy

I’ve written about literature searching before here. Search terms should be devised to identify the literature to allow the research question to be answered; using the PICO/PIOS frameworks can aid this. Incorporating Boolean logic into the search strategy is essential to increase the sensitivity and efficacy of the search.

OR is used between synonyms

AND is used to combine other terms.

The search strategy can be piloted or ‘test driven’ to determine whether it is comprehensive enough and in turn allow it to be revised if appropriate, for example if you know some papers that are in existence on the topic have been missed. Despite even the most robust search strategy, you will inevitably receive some hits on your search that have no relevance to your topic what so ever! This can be limited by using the ‘filters’ on the database such as study design, human, timespan (if relevant) etc.

At this stage in the review process liaising with the University librarians can be a God send!

Screening the Literature

Pre-defined inclusion/exclusion criteria should be used to filter the literature returned from your search, the process for filtering your search results should be described clearly in the protocol.

Abstract and Tiles are usually checked first to see whether the studies are relevant, potentially relevant, irrelevant or unclear due to insufficient detail. Those papers not excluded at this stage are then obtained in full text before having the full inclusion/exclusion criteria applied to them.

Whilst abstracts only and conference proceedings are legitimate reasons for exclusion due to the lack of detail, ‘English Language Only’ shouldn’t be an exclusion criterion. Globalisation makes it possible for research groups in one country to liaise with groups in other countries for papers to be translated as necessary and in turn limiting language bias.

This process can be summarised as such:

Check Abstracts

Exclude those completely inappropriate and keep those that look appropriate as well as studies that you aren’t sure about or have insufficient detail to make a decision and then obtain full text.

Review Full Text against the Inclusion/Exclusion Criteria

Exclude inappropriate articles and keep those that are relevant.

This stage (as well as data extraction and quality assessment) is usually conducted by two reviewers as misunderstandings and misinterpretations do occur when extracting data or making a judgement on the quality of included studies; some even include a kappa value to show reliability between those involved which adds to the transparency of the data collection and review process, improving the trustworthiness of the review.

Data Extraction

Develop a data extraction form, pilot it and then make necessary changes; standardised forms are available which make this process easier.

The information to be extracted can include the following:

- Study Design

- Sample Size per trial arm

- Participant Characteristics

- Description of Intervention

- Setting

- Outcome Measures

- Follow Up

- Attrition and Missing Data

- Results – Binary and Continuous Data for all outcomes

- Author conclusions

This process as mentioned above should be carried out by two reviewers independently; usually one reviewer undertakes the process with the second reviewer then checking.

Quality Assessment

Pre-defined quality criteria exists for the majority of study designs and therefore it usual for these published critical appraisal checklists to be utilised for determining the quality of included studies, such as the PEDro scale or the Cochrane Risk of Bias tool.

When conducting a review it may be appropriate to operationalise the checklist so it is clear how to interpret the criteria. For example, “is there long term follow up?” could be defined to give a time period. This in turn should allow a standardised assessment of quality. If possible get your interpretation checked by a fellow researcher; this process can be enhanced by calculating a kappa coefficient as detailed previously.

Synthesis

To synthesise the results of studies, pre-defined approaches can be used also, such as the Levels of Evidence system. However, a decision needs to be made as to whether or not to undertake a quantitative or qualitative synthesis. The Levels of Evidence approach or a GRADE approach are regarded as a narrative or qualitative synthesis.

Levels of Evidence

Strong – consistent findings among multiple high-quality RCTs

Moderate – consistent findings among multiple low-quality RCTs and/or controlled clinical trials (CCT) and/or one high quality RCT.

Limited – One low-quality RCT and/or CCT

Conflicting – Inconsistent finding among trials (RCTs or CCTs)

No evidence – no trials.

This approach can also be operationalised e.g. What consists multiple? Or words could be changed if you are systematic reviewing the reliability or diagnostic validity of an orthopaedic test as opposed to an intervention where you’d be unlikely to find RCTs/CCTs. A ‘GRADE’ approach is more commonly used in the current literature in attempt to define the risk of bias as opposed to the level of quality (a higher risk of bias is associated with a lower quality paper and vice versa); such an approach makes it easier for the quality of the included studies to be taken into account when summarising the results.

A meta-analysis increases the precision of estimates of a treatment effect, it is a statistical analysis of data from a number of studies to synthesise results. A meta-analysis can be regarded as a quantitative synthesis as a single numerical statistic is created, there are however criteria that need to be satisfied, if these criteria are not fulfilled and a meta-analysis is not indicated, a qualitative or narrative synthesis should be conducted.

Criteria:

- Are interventions, populations receiving interventions and outcomes sufficiently similar across studies?

- Can results be expressed as a single measure of effect as a numerical value?

- Does it make clinical sense to combine results into a single estimate?

The process of a meta-analysis is the same as for a systematic review, however the outcome data is presented differently. Individual results are presented using a Forest Plot as mean effect sizes with 95% confidence intervals on either side of a line of ‘no effect’. The line of no effect is between ‘favours treatment’ and ‘favours control’.

Presentation of Results

The main features of each included study should be presented, such as patient demographics, sample size, control group and outcomes; the outcomes are dependent on the study and the review question e.g. 95% CI, P-Value, Kappa Coefficient, sensitivity/specificity etc. There is usually a text commentary on the quality of the included studies, the main outcomes as well as a summary table. Tables are usually essential to convey the sizeable amount of data in an understandable and clear format. Tables may portray the following:

- Search strategies

- Search terms/databases

- Yield from search strategy

- Total publications

- Relevant publications

- Main features of included studies

- Quality of included studies

- Outcomes

Summary

The discussion or summary paragraphs should present the major conclusions of the review with regard to answering the initial research question. The sources of the evidence should be portrayed with relation to the quality/strength of the evidence to allow you to determine the confidence you can make about the conclusions i.e. the summary needs to be linked to the quality of the primary work. Limitations of the review should be considered such as any biases in the primary data (e.g. publication bias), missing data, search strategy etc. The review should then finish with a summary of the clinical implications of the main review findings before stating the implications for further research.

How to read and appraise a systematic review.

Understanding the methodology and what needs to be done is a good basic starting point for appraising a review, so checking whether the process detailed above and being familiar with the process is crucial. Until relatively recently, not much consideration was given to the appraisal of systematic reviews. However, there are now criteria available to critique these types of studies rather than just blindly accepting them – these criteria such as AMSTAR (Shea et al. 2007) and CASP can be used to help you critique the paper. However, the salient points I will look at in a little more detail using points adapted from Greenhalgh (2010) and Littlewood and May (2013). The appraisal of SR has been made easier due to the PRISMA statement – a standard, structured format for writing up and presenting SR. For an example of a poor systematic review see here.

- Is the clinical question addressed by the review clear and was the method given a priori?

- Was a thorough search of the appropriate databases done and were other potentially important sources explored? i.e. Language bias.

- Was methodological quality assessed and the trials weighted accordingly?

- Were at least two reviewers involved in the study selection/data extraction process?

You will have all written essays and know that when you are trying to synthesise a multiple of papers you go down routes you never intended to, when undertaking a SR the research question needs to be clearly defined and obvious to allow the appropriate papers to be included/excluded or the review will not answer the question it set out to answer.

A thorough search needs to be clearly visible, this may include hand searching, snowballing reference lists and if a meta-analysis is to be conducted, sometimes contacting the lead author for data that may not have been included in the original write up.

As detailed above, aspects of the methodology (study selection/quality assessment/data extraction) need to be conducted by more than one reviewer. Misunderstandings & misinterpretations do occur when extracting data or making a judgement on the quality of included studies; a kappa value may be utilised as previously discussed.